TF-IDF (Term Frequency–Inverse Document Frequency) is a text representation technique used in natural language processing and search. It assigns a weight to each term in a document based on two factors:

1. Term Frequency (TF):

- Measures how often a word appears in a document.

- Higher frequency = higher importance within that document.

2. Inverse Document Frequency (IDF):

- Measures how rare the word is across all documents in a corpus.

- Words common across many documents (like “the” or “and”) get lower scores.

Thus, common words like “the” or “and” are assigned low weight, while discriminative terms like “neural,” “SEO,” or “embedding” get higher importance.

This weighting aligns with the way search engines assess semantic relevance by giving preference to terms that meaningfully differentiate content.

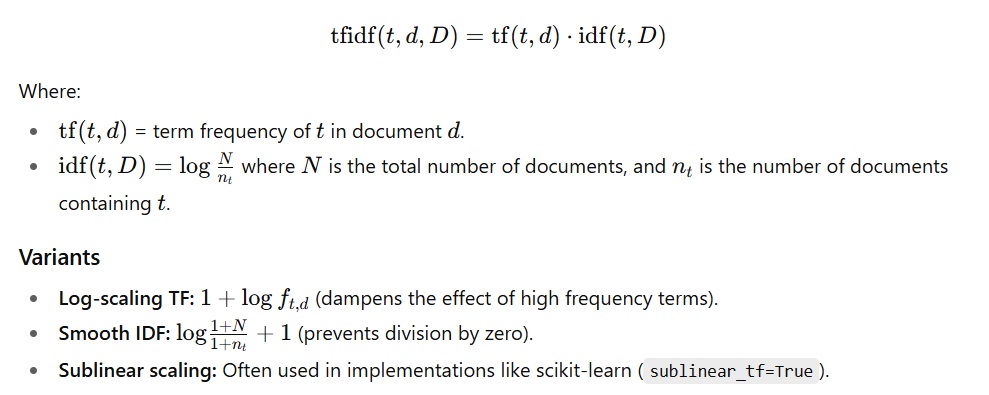

The Core Formula

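The canonical TF-IDF score for a term $t$ in a document $d$ is:

$$\text{tf-idf}(t, d) = \text{tf}(t, d) \times \text{idf}(t), \qquad \text{idf}(t) = \log \frac{N}{\text{df}(t)}$$

where $\text{tf}(t, d)$ is the number of times $t$ occurs in $d$, $N$ is the number of documents in the corpus, and $\text{df}(t)$ is the number of documents that contain $t$.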

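Practical implementations often refine both factors, for example with sublinear TF scaling ($1 + \log \text{tf}$) and smoothed IDF (adding 1 to document frequencies so that no term causes a division by zero). These refinements reflect the same balancing act as query optimization in search pipelines: maximizing discriminative power while minimizing noise.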
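A minimal from-scratch sketch of the canonical score, assuming raw counts for tf(t, d) and the unsmoothed idf(t) = log(N / df(t)). The function name `tfidf` and the toy documents are illustrative assumptions; libraries such as scikit-learn apply smoothing and normalization by default, so their numbers will differ.

```python
import math
from collections import Counter


def tfidf(docs: list[list[str]]) -> list[dict[str, float]]:
    """Canonical TF-IDF: raw count tf(t, d) times idf(t) = log(N / df(t))."""
    n_docs = len(docs)
    # df(t): number of documents containing term t at least once
    df = Counter(term for doc in docs for term in set(doc))
    weights = []
    for doc in docs:
        tf = Counter(doc)  # raw term counts within this document
        weights.append({t: count * math.log(n_docs / df[t]) for t, count in tf.items()})
    return weights


docs = [
    ["the", "cat", "sat"],
    ["the", "dog", "sat"],
    ["the", "neural", "embedding", "embedding"],
]
for w in tfidf(docs):
    print(w)
```

Because "the" occurs in every document, log(N / df) = log(1) = 0 and its weight vanishes, while "embedding" (two occurrences, one document) gets the largest weight.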

How TF-IDF Works in Practice (Pipeline)

The TF-IDF pipeline follows a structured process:

Preprocessing

Tokenization, lowercasing, stopword removal.

Optional stemming or lemmatization.

Mirrors preprocessing steps in lexical semantics.
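A minimal sketch of the preprocessing step, assuming a simple regex tokenizer and a small hand-written stopword list (both are illustrative stand-ins; stemming and lemmatization are omitted):

```python
import re

# Hypothetical, deliberately tiny stopword list for illustration only;
# real pipelines typically use a curated list (e.g., from NLTK or spaCy).
STOPWORDS = {"the", "is", "a", "an", "and", "of", "for", "to"}


def preprocess(text: str) -> list[str]:
    """Lowercase, split into word-character tokens, and drop stopwords."""
    tokens = re.findall(r"\b\w+\b", text.lower())
    return [tok for tok in tokens if tok not in STOPWORDS]


print(preprocess("TF-IDF is a baseline for Information Retrieval"))
# ['tf', 'idf', 'baseline', 'information', 'retrieval']
```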

Vocabulary Construction

Each unique word in the corpus becomes a feature.

Large vocabularies may require pruning (min_df, max_df).

Vectorization

Convert documents into document–term matrices with raw counts.

Transform these counts into TF-IDF weights.
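The two vectorization steps map onto scikit-learn's `CountVectorizer` (raw counts) and `TfidfTransformer` (reweighting). A sketch on a made-up corpus, checking that the two-step route matches the one-step `TfidfVectorizer` when both use default settings:

```python
import numpy as np
from sklearn.feature_extraction.text import CountVectorizer, TfidfTransformer, TfidfVectorizer

docs = [
    "semantic search improves relevance",
    "search engines rank relevant documents",
    "embeddings capture semantic similarity",
]

# Step 1: document-term matrix of raw counts
counts = CountVectorizer().fit_transform(docs)
print(counts.toarray())

# Step 2: reweight the counts by IDF (rows are also L2-normalized by default)
tfidf_two_step = TfidfTransformer().fit_transform(counts)

# One-step equivalent with the same default settings
tfidf_one_step = TfidfVectorizer().fit_transform(docs)
print(np.allclose(tfidf_two_step.toarray(), tfidf_one_step.toarray()))  # True
```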

Normalization

Use cosine normalization (norm='l2' in scikit-learn) to scale document vectors for fair comparison.
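A quick check of what `norm='l2'` does, using two made-up documents of very different lengths: every row ends up with unit Euclidean length, so a plain dot product between rows equals cosine similarity.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.metrics.pairwise import cosine_similarity

docs = [
    "short doc about search",
    "a much longer document about search that repeats search and search again",
]
X = TfidfVectorizer(norm="l2").fit_transform(docs)

# Each row has length ~1.0 regardless of how long the document is
print(np.linalg.norm(X.toarray(), axis=1))

# With unit-length rows, the dot product X @ X.T is exactly cosine similarity
print(np.allclose((X @ X.T).toarray(), cosine_similarity(X)))  # True
```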

The final matrix provides a structured, sparse representation ready for retrieval or classification, much like a contextual hierarchy organizes entities for better understanding.

Example Implementation (Python)

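```python
from sklearn.feature_extraction.text import TfidfVectorizer

docs = [
    "semantic search improves relevance",
    "TF-IDF is a baseline for information retrieval",
    "embeddings capture semantic similarity",
]

# sublinear_tf: use 1 + log(tf); smooth_idf: add 1 to document frequencies;
# norm="l2": scale each document vector to unit length
vectorizer = TfidfVectorizer(sublinear_tf=True, smooth_idf=True, norm="l2")
X = vectorizer.fit_transform(docs)  # sparse matrix: documents x terms

print(vectorizer.get_feature_names_out())  # vocabulary, in column order
print(X.toarray())  # dense view of the TF-IDF weights
```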

This produces a weighted, normalized matrix where each row is a document and each column corresponds to a term.

Why TF-IDF Became Revolutionary

TF-IDF solved a key problem that plagued early bag-of-words (BoW) models: term dominance.

In BoW, high-frequency but meaningless terms (e.g., “the,” “is”) skewed similarity measures.

TF-IDF penalized common terms and rewarded rare terms, making retrieval and classification far more precise.

In SEO, this parallels the difference between keyword stuffing and building topical authority. Just as TF-IDF reduces the weight of filler terms, Google reduces the weight of low-value signals.

Advantages of TF-IDF

Simple yet effective: Easy to compute, highly interpretable.

Strong baseline: Still competitive for tasks like spam filtering and document classification.

Sparse efficiency: Works well with large-scale corpora using sparse matrix operations.

Transparent weighting: Clear why a term gets a higher or lower weight.

Much like a topical map, TF-IDF provides structured clarity before layering more advanced semantic models.
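As a sketch of the "strong baseline" claim, here is a TF-IDF + logistic regression pipeline for spam filtering. The four training sentences and labels are invented for illustration; a real baseline needs a real labeled dataset and a held-out evaluation.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

# Tiny, made-up training set (illustration only)
train_docs = [
    "win free money now",
    "free prize claim now",
    "meeting agenda for monday",
    "project status update monday",
]
train_labels = ["spam", "spam", "ham", "ham"]

# TF-IDF features feed a linear classifier
model = make_pipeline(TfidfVectorizer(), LogisticRegression())
model.fit(train_docs, train_labels)

print(model.predict(["claim your free money", "monday meeting notes"]))
```

Because the features are terms, the fitted coefficients can be inspected directly, which is part of what makes this baseline easy to interpret.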

Limitations of TF-IDF

Despite its strengths, TF-IDF has well-known limitations:

Ignores word order → “dog bites man” = “man bites dog.”

No semantics → Cannot capture synonyms or contextual meaning.

Vocabulary sensitivity → Out-of-vocabulary (OOV) terms cannot be represented.

Document length effects → Longer documents may skew weights without normalization.

Usually outperformed by BM25 in retrieval → TF-IDF lacks the term-frequency saturation and document-length normalization that BM25 adds.
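The word-order and out-of-vocabulary limitations are easy to see directly. A minimal sketch with scikit-learn's default `TfidfVectorizer`:

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer

vectorizer = TfidfVectorizer()
X = vectorizer.fit_transform(["dog bites man", "man bites dog"])

# Word order is discarded: both sentences map to the same vector
print(np.allclose(X[0].toarray(), X[1].toarray()))  # True

# Out-of-vocabulary terms are silently dropped at transform time
oov = vectorizer.transform(["cat chases mouse"])
print(oov.nnz)  # 0, i.e. an all-zero vector
```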

These limitations pushed search engines toward probabilistic models like BM25 and, later, toward semantic similarity based on contextual embeddings.

TF-IDF vs BM25: Why BM25 Often Wins

While TF-IDF was groundbreaking, BM25 improved upon it by introducing two refinements:

Saturating Term Frequency

TF-IDF rewards frequent terms linearly.

BM25 uses a saturation curve, rewarding early occurrences more than later ones.

Document Length Normalization

TF-IDF normalization is less robust for long documents.

BM25 penalizes longer documents more consistently.
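A sketch of the per-term BM25 score showing both refinements. It assumes the common defaults k1 = 1.2 and b = 0.75 and the Lucene-style IDF log(1 + (N - df + 0.5) / (df + 0.5)); other BM25 variants use slightly different IDF formulas.

```python
import math


def bm25_term_score(tf, doc_len, avg_doc_len, n_docs, df, k1=1.2, b=0.75):
    """BM25 contribution of one query term to one document's score."""
    # Lucene-style IDF; the +1 inside the log keeps it positive
    idf = math.log(1 + (n_docs - df + 0.5) / (df + 0.5))
    # Length normalization: documents longer than average get a larger denominator
    length_norm = 1 - b + b * doc_len / avg_doc_len
    # Saturating TF: grows quickly for the first occurrences, then levels off
    return idf * tf * (k1 + 1) / (tf + k1 * length_norm)


for tf in [1, 2, 5, 10, 50]:
    score = bm25_term_score(tf, doc_len=100, avg_doc_len=100, n_docs=1000, df=10)
    print(tf, round(score, 3))
```

With these settings a term's contribution can never exceed idf × (k1 + 1) however often it repeats, whereas raw-count TF-IDF keeps growing linearly with tf.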

In search, BM25 is now the standard for first-stage retrieval. Yet, TF-IDF is still used as a baseline and for interpretability. This evolution mirrors the move from basic keyword signals to more refined ranking signals in SEO.

TF-IDF vs Embeddings: Lexical vs Semantic

TF-IDF is lexical: it focuses on the words that appear. Embeddings are semantic: they capture meaning and relationships.

TF-IDF Strengths

Transparent

Sparse and efficient

Works well with linear models

Embedding Strengths

Capture semantic similarity between words

Handle synonyms and polysemy

Adapt to context with models like BERT

In practice, hybrid retrieval systems combine the strengths of both. TF-IDF provides lexical grounding, while embeddings capture contextual meaning — similar to how SEO blends keywords and entities through entity graphs.
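A minimal sketch of hybrid scoring as a weighted sum of a lexical score and a dense score. The TF-IDF part uses real scikit-learn calls; the embedding vectors are hypothetical toy numbers standing in for an encoder such as BERT, and `alpha = 0.5` is an arbitrary illustrative weight.

```python
import numpy as np
from sklearn.feature_extraction.text import TfidfVectorizer

docs = [
    "cheap flights to paris",
    "affordable airfare for european trips",
    "history of the eiffel tower",
]
query = "cheap flights"

# Lexical signal: TF-IDF cosine similarity (rows are L2-normalized by default)
vectorizer = TfidfVectorizer()
doc_vecs = vectorizer.fit_transform(docs)
query_vec = vectorizer.transform([query])
lexical = (doc_vecs @ query_vec.T).toarray().ravel()

# Semantic signal: HYPOTHETICAL toy embeddings standing in for a real encoder
doc_emb = np.array([[0.9, 0.1, 0.0], [0.8, 0.2, 0.1], [0.1, 0.1, 0.9]])
query_emb = np.array([1.0, 0.1, 0.0])
dense = doc_emb @ query_emb / (np.linalg.norm(doc_emb, axis=1) * np.linalg.norm(query_emb))

# Hybrid score: simple weighted sum (alpha is illustrative, not a tuned value)
alpha = 0.5
hybrid = alpha * lexical + (1 - alpha) * dense
print(np.argsort(-hybrid))  # document indices, best first
```

Document 1 shares no words with the query, so its lexical score is zero, yet the dense score still ranks it above the unrelated document 2. In practice, scores from the two systems usually need normalizing to a common scale before they are mixed.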

TF-IDF in Modern Information Retrieval

Even in 2025, TF-IDF remains relevant in three key ways:

Baseline for Evaluation

New retrieval models (dense retrievers, rerankers) are often benchmarked against TF-IDF or BM25 baselines.

First-Stage Retrieval

TF-IDF or BM25 quickly narrows the candidate set.

Semantic models then re-rank results.

Feature Extraction

TF-IDF features still power text classification, clustering, and recommendation.

These layered retrieval strategies echo contextual hierarchy, where systems move from surface-level signals to deep meaning.
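A sketch of the first-stage/re-rank pattern described above. `retrieve_then_rerank` and `toy_reranker` are hypothetical names, and the toy re-ranker is a placeholder where a real system would call a neural model.

```python
from typing import Callable

from sklearn.feature_extraction.text import TfidfVectorizer


def retrieve_then_rerank(
    query: str, docs: list[str], rerank_fn: Callable[[str, str], float], k: int = 2
) -> list[int]:
    vectorizer = TfidfVectorizer()
    doc_vecs = vectorizer.fit_transform(docs)
    scores = (doc_vecs @ vectorizer.transform([query]).T).toarray().ravel()
    # Stage 1 (cheap): top-k documents that share at least one term with the query
    candidates = [int(i) for i in scores.argsort()[::-1][:k] if scores[i] > 0]
    # Stage 2 (expensive): re-score only the candidates
    return sorted(candidates, key=lambda i: rerank_fn(query, docs[i]), reverse=True)


docs = [
    "bm25 ranking for search",
    "tf-idf ranking baseline",
    "cooking pasta at home",
    "search ranking with tf-idf and bm25",
]


# HYPOTHETICAL stand-in for a neural re-ranker: prefers documents mentioning bm25
def toy_reranker(query: str, doc: str) -> float:
    return 1.0 if "bm25" in doc else 0.0


print(retrieve_then_rerank("tf-idf ranking", docs, toy_reranker))  # [3, 1]
```

The expensive scorer only ever sees the k lexical candidates, which is what keeps this layered design affordable at scale.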

Advanced Research and Hybrid Models

TF-IDF has inspired modern hybrid systems that combine sparse and dense retrieval:

SPLADE (Sparse Lexical and Expansion Model)

Uses transformers to expand queries/documents but still outputs sparse, TF-IDF-like vectors. It keeps efficiency while injecting semantics.

DeepBoW (2024)

Extends BoW/TF-IDF with pretrained embeddings, creating hybrid representations.

Neural Bag-of-Ngrams

Adds semantic depth by embedding sequences instead of raw words.

These approaches reflect how search engines blend historical lexical features with semantic embeddings to maximize trust and topical authority.

TF-IDF and Semantic SEO

The parallels between TF-IDF and SEO evolution are striking:

Downweighting stopwords in TF-IDF is like Google devaluing low-quality signals in ranking.

Rare terms gaining weight reflects the importance of topical coverage in SEO.

Hybrid retrieval (TF-IDF + embeddings) mirrors how modern SEO requires both keyword grounding and semantic optimization.

Just as TF-IDF balances term frequency and rarity, effective content balances entity density and context within a topical map.

In essence, TF-IDF is the SEO of the keyword era — and understanding it helps explain why search engines shifted toward entities, context, and semantic signals.

Frequently Asked Questions (FAQs)

Is TF-IDF still useful in 2025?

Yes. It remains a strong baseline, especially in short-text classification and retrieval tasks.

Why is BM25 preferred over TF-IDF?

BM25 adds more robust document length normalization and saturates term frequency, so repeated occurrences of a term are not over-rewarded the way raw counts are in TF-IDF.

Does TF-IDF capture meaning?

No. It is purely lexical. For meaning, you need embeddings or semantic models.

Can TF-IDF and embeddings be combined?

Yes. Hybrid retrieval systems use TF-IDF for fast, interpretable grounding and embeddings for semantic depth.

What’s the SEO analogy of TF-IDF?

It represents the keyword-driven stage of SEO, before the rise of entities, query semantics, and semantic search.

Final Thoughts on TF-IDF

TF-IDF reshaped how machines interpret text by teaching them that not all words are equal. It brought us from raw frequency counts to weighted lexical features — a critical step toward modern semantic retrieval.

In SEO, this same shift marked the journey from keyword stuffing to semantic authority:

From token counts → to entities.

From raw frequency → to contextual meaning.

From isolated keywords → to structured topical hierarchies.

Understanding TF-IDF is essential for anyone exploring text representation, search, or semantic SEO — not because it is state-of-the-art, but because it shows where we started and why semantics matter.

