Machine Translation (MT) is the process of converting text from one language into another while preserving meaning, style, and fluency. Unlike a dictionary lookup, MT must navigate:

- Ambiguity (words with multiple meanings).

- Grammar and word order differences.

- Morphological complexity across languages.

At its heart, translation is a problem of mapping semantic relevance between languages, ensuring that meaning, not just words, aligns. This parallels how search engines interpret query intent to deliver results that match the deeper context of a search rather than its literal keywords.

Machine Translation (MT) has long been one of the most ambitious challenges in Natural Language Processing. It aims to make meaning travel seamlessly across languages — transforming communication, commerce, and culture.

From Statistical Machine Translation (SMT) to today’s neural systems, MT reflects the broader shift in NLP: from surface-level statistical probabilities to contextual, semantic representations. This article first covers the foundations of MT and the statistical era that dominated until the mid-2010s, then turns to transformer-based MT and its role in semantic SEO.

The Era of Statistical Machine Translation (SMT)

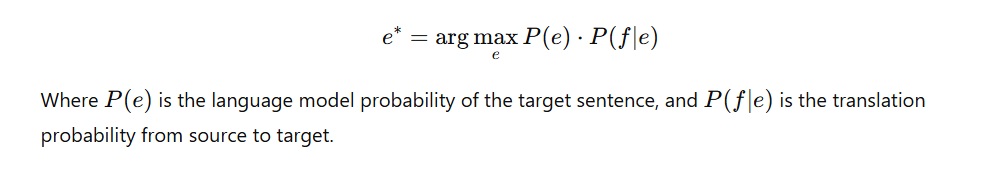

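For nearly two decades, SMT defined the field. It modeled translation as a probabilistic process, learning word and phrase correspondences from large parallel corpora instead of relying on hand-written rules.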
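In its classic noisy-channel form, SMT searches for the target sentence e that is most probable given the source sentence f. Bayes' rule splits that search into a translation model and a language model, and P(f) drops out because it does not depend on e. The notation below is the standard textbook formulation, not something specific to this article. Later phrase-based systems generalized it into a weighted log-linear combination of feature functions h_i with weights λ_i (using h_1 = log P(f | e) and h_2 = log P(e) with unit weights recovers the noisy channel).

```latex
\begin{aligned}
\hat{e} &= \arg\max_{e} P(e \mid f)
         = \arg\max_{e} \underbrace{P(f \mid e)}_{\text{translation model}}\,\underbrace{P(e)}_{\text{language model}} \\
\hat{e} &= \arg\max_{e} \sum_{i} \lambda_i \, h_i(e, f) \qquad \text{(log-linear generalization)}
\end{aligned}
```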

Word-Based SMT

The IBM alignment models of the early 1990s framed translation within the noisy channel framework: the source sentence is treated as a corrupted version of a target-language sentence, and translation means decoding the most likely original. These models introduced statistical word alignments and paved the way for phrase-level mappings.
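The statistical word alignments these models learn can be illustrated with IBM Model 1, the simplest of the family. It estimates lexical translation probabilities t(f | e) by expectation maximization: in each sentence pair, every source word is softly aligned to every target word (plus an empty NULL word), and the probabilities are re-estimated from those expected counts. This is a minimal sketch, assuming a tiny hypothetical German-English corpus and a hypothetical function name `train_ibm_model1`. Production aligners such as GIZA++ add the higher IBM models and far more data.

```python
from collections import defaultdict

NULL = "<null>"  # empty word that source words may align to


def train_ibm_model1(pairs, iterations=10):
    """Estimate t[(f, e)] ~ P(f | e) from (source_tokens, target_tokens) pairs via EM."""
    f_vocab = {f for fs, _ in pairs for f in fs}
    uniform = 1.0 / len(f_vocab)
    t = defaultdict(lambda: uniform)  # start from uniform probabilities
    for _ in range(iterations):
        count = defaultdict(float)  # expected counts c(f, e)
        total = defaultdict(float)  # expected counts c(e)
        for fs, es in pairs:
            es = [NULL] + es
            for f in fs:
                # E-step: share f's alignment probability over all candidate target words.
                norm = sum(t[(f, e)] for e in es)
                for e in es:
                    frac = t[(f, e)] / norm
                    count[(f, e)] += frac
                    total[e] += frac
        # M-step: normalise expected counts per target word.
        t = defaultdict(float, {(f, e): c / total[e] for (f, e), c in count.items()})
    return t


# Hypothetical toy corpus of (German, English) sentence pairs.
TOY_CORPUS = [
    ("das haus".split(), "the house".split()),
    ("das buch".split(), "the book".split()),
    ("ein buch".split(), "a book".split()),
]

if __name__ == "__main__":
    t = train_ibm_model1(TOY_CORPUS)
    for f, e in [("das", "the"), ("haus", "the"), ("haus", "house"), ("buch", "book")]:
        print(f, e, round(t[(f, e)], 3))
```

No alignments are given as input. Even so, probability mass shifts toward pairs that consistently co-occur, such as `das`/`the` and `buch`/`book`.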

Phrase-Based SMT (PBSMT)

Phrase-based SMT captured context beyond words by aligning multi-word expressions. Systems like Moses popularized PBSMT, enabling practical deployment across industries.

This shift reflected a growing emphasis on contextual hierarchy in language — grouping meaning into chunks rather than isolated tokens.
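Phrase tables were typically built by extracting every phrase pair that is consistent with a word alignment. No word inside the source phrase may align to a word outside the target phrase, and vice versa. The function below is a simplified sketch of that consistency check and skips the usual extension over unaligned words. The name `extract_phrases`, the `max_len` limit, and the toy sentence pair are illustrative assumptions, not Moses's actual code.

```python
def extract_phrases(src, tgt, alignment, max_len=4):
    """Return (source_phrase, target_phrase) pairs consistent with a word alignment.

    alignment is a set of (i, j) links meaning src[i] is aligned to tgt[j].
    Simplification: unaligned boundary words are not used to extend phrases.
    """
    pairs = set()
    for s_start in range(len(src)):
        for s_end in range(s_start, min(s_start + max_len, len(src))):
            # Target positions linked to any word in the source span.
            linked = [j for (i, j) in alignment if s_start <= i <= s_end]
            if not linked:
                continue
            t_start, t_end = min(linked), max(linked)
            if t_end - t_start + 1 > max_len:
                continue
            # Consistency: every link into the target span must come from the source span.
            if all(s_start <= i <= s_end for (i, j) in alignment if t_start <= j <= t_end):
                pairs.add((" ".join(src[s_start:s_end + 1]),
                           " ".join(tgt[t_start:t_end + 1])))
    return pairs


# Hypothetical example pair with a reordered verb.
SRC = "ich habe das haus gesehen".split()
TGT = "i have seen the house".split()
ALIGN = {(0, 0), (1, 1), (2, 3), (3, 4), (4, 2)}  # (source index, target index)

if __name__ == "__main__":
    for pair in sorted(extract_phrases(SRC, TGT, ALIGN)):
        print(pair)
```

On this pair, `das haus` / `the house` and the reordered chunk `das haus gesehen` / `seen the house` are extracted. `haus gesehen` is rejected: its minimal target span `seen the house` contains `the`, which is aligned to `das` outside the source phrase.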

Hierarchical and Syntax-Based SMT

Later extensions like Hiero used synchronous context-free grammars to model long-distance reordering, while syntax-based SMT incorporated parse trees. These innovations improved grammaticality but remained limited in capturing semantic nuance.
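A Hiero-style synchronous rule pairs a source pattern with a target pattern, and the two share indexed nonterminals. One rule can therefore translate and reorder sub-phrases at the same time. The sketch below shows only how a rule is applied, assuming the sub-translations for X1 and X2 are already known. The rule, the Chinese example, and the helper names are illustrative. A real Hiero decoder also parses the source with many competing rules and scores the resulting derivations with features and a language model.

```python
# Illustrative Hiero-style rule: X -> < X1 的 X2 , the X2 of X1 >
# Both sides share the nonterminals X1 and X2; the target side swaps their order.
RULE = (["X1", "的", "X2"], ["the", "X2", "of", "X1"])


def expand(side, bindings, which):
    """Expand one side of a rule; which=0 selects source tokens, 1 target tokens."""
    out = []
    for sym in side:
        if sym in bindings:  # nonterminal: splice in the sub-translation
            out.extend(bindings[sym][which])
        else:  # terminal word copied as-is
            out.append(sym)
    return out


def apply_rule(rule, bindings):
    """Apply a synchronous rule; bindings maps each nonterminal to (source, target) tokens."""
    src_side, tgt_side = rule
    return expand(src_side, bindings, 0), expand(tgt_side, bindings, 1)


if __name__ == "__main__":
    bindings = {"X1": (["澳洲"], ["Australia"]), "X2": (["首都"], ["capital"])}
    src, tgt = apply_rule(RULE, bindings)
    print(" ".join(src), "->", " ".join(tgt))  # prints: 澳洲 的 首都 -> the capital of Australia
```

X1 and X2 can themselves be filled by other rules. That nesting lets the same mechanism reorder across longer spans, which flat phrase pairs cannot capture.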

Strengths and Weaknesses of SMT

Strengths:

Transparent: phrase tables and feature weights could be inspected.

Effective in domains with rich bilingual corpora.

Still useful for constrained, domain-specific applications.

Weaknesses:

Poor handling of rare or unseen words.

Difficulty modeling long-range dependencies.

Limited grasp of semantic similarity, since it optimized surface alignments rather than meaning.

From an SEO lens, SMT couldn’t naturally form robust entity graphs, since it optimized probabilities rather than meaning structures.

The Transition Toward Neural MT

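From 2014 onward, Neural Machine Translation (NMT) rapidly closed the gap with SMT, and by around 2016 it had overtaken SMT in shared-task evaluations and production systems. Early RNN-based sequence-to-sequence models with attention produced markedly more fluent, context-aware output than statistical methods.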

This marked the pivot from statistical correlation to representation learning — embedding words and sentences in vector spaces where meaning could be transferred.

The shift was akin to moving from keyword-based indexing toward semantic content networks, where relationships, not just surface forms, drive retrieval and understanding.

Transformer-Based Machine Translation

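The Transformer (Vaswani et al., 2017) introduced an architecture built entirely on self-attention, replacing recurrence and convolution. This enabled much more parallel training and better modeling of long-distance dependencies. The original model reached state-of-the-art BLEU scores on the WMT 2014 English-to-German and English-to-French benchmarks while training faster than earlier RNN- and convolution-based models.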
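The core operation in the Transformer is scaled dot-product attention, softmax(QKᵀ / √d_k)·V. Each token scores every other token, and its new representation is a weighted average over the whole sentence. Below is a minimal pure-Python sketch under two simplifying assumptions. First, queries, keys, and values are the input vectors themselves, whereas a real Transformer learns a separate projection for each. Second, it uses a single head rather than multi-head attention. The function names and toy vectors are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(X):
    """Single-head scaled dot-product self-attention with Q = K = V = X.

    X is a list of n token vectors of equal length d. Returns (outputs, weights),
    where weights[i][j] is how much token i attends to token j.
    """
    d = len(X[0])
    scale = math.sqrt(d)
    outputs, weights = [], []
    for q in X:
        # Score this query against every key (here: every input vector).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in X]
        w = softmax(scores)
        weights.append(w)
        # New representation: attention-weighted average of all value vectors.
        outputs.append([sum(wj * v[i] for wj, v in zip(w, X)) for i in range(d)])
    return outputs, weights


if __name__ == "__main__":
    # Hypothetical 2-dimensional "embeddings" for a 3-token sentence.
    X = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    outputs, weights = self_attention(X)
    for row in weights:
        print([round(w, 3) for w in row])
```

Every row of weights sums to 1 and covers all positions at once. A word can therefore relate to a distant word in a single step, and all positions can be computed in parallel.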

Why Do Transformers Excel?

Self-attention captures global dependencies across entire sentences.

Subword units (via BPE or SentencePiece) handle morphology and rare words.

The encoder-decoder structure with multi-head attention learns soft alignments between source and target words, which supports accurate and fluent output.
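BPE subword segmentation starts from characters and repeatedly merges the most frequent adjacent symbol pair. Frequent words become single units, while rare words stay decomposable into smaller pieces. This is a compact sketch in the spirit of the procedure described by Sennrich et al. (2016). The toy word counts and function names are assumptions. Toolkits such as subword-nmt or SentencePiece add efficient updates and a separate step for applying the learned merges to new text.

```python
import re
from collections import Counter


def get_pair_counts(vocab):
    """Count adjacent symbol pairs, weighted by word frequency."""
    pairs = Counter()
    for word, freq in vocab.items():
        symbols = word.split()
        for a, b in zip(symbols, symbols[1:]):
            pairs[(a, b)] += freq
    return pairs


def merge_pair(pair, vocab):
    """Merge every whole-symbol occurrence of pair into one symbol."""
    pattern = re.compile(r"(?<!\S)" + re.escape(" ".join(pair)) + r"(?!\S)")
    merged = "".join(pair)
    return {pattern.sub(merged, word): freq for word, freq in vocab.items()}


def learn_bpe(word_freqs, num_merges):
    """Learn up to num_merges merge operations from a {word: frequency} dict."""
    # Each word starts as space-separated characters plus an end-of-word marker.
    vocab = {" ".join(word) + " </w>": freq for word, freq in word_freqs.items()}
    merges = []
    for _ in range(num_merges):
        pairs = get_pair_counts(vocab)
        if not pairs:
            break
        best = max(pairs, key=pairs.get)  # most frequent pair (first one on ties)
        vocab = merge_pair(best, vocab)
        merges.append(best)
    return merges, vocab


if __name__ == "__main__":
    merges, vocab = learn_bpe({"low": 5, "lower": 2, "newest": 6, "widest": 3}, 3)
    print(merges)
    print(vocab)
```

On these counts the first three merges are `e s`, `es t`, and `est </w>`. The suffix `est</w>` thus becomes a single unit that an unseen word such as `lowest` could reuse.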

In essence, Transformers improved semantic relevance across translations by modeling context holistically, not just locally.

SEO Implication

By producing higher-quality, contextually faithful translations, Transformers support the creation of multilingual entity graphs, strengthening global visibility for businesses across markets.

Multilingual and Multimodal MT

Beyond bilingual systems, MT has scaled to cover hundreds of languages:

NLLB-200: Meta’s No Language Left Behind model, covering 200 languages and evaluated on the FLORES-200 benchmark, with strong quality for low-resource pairs.

SeamlessM4T: A unified speech + text model supporting speech-to-speech, text-to-text, and speech-to-text translation across ~100 languages.

These advances show how MT has evolved into a semantic content network connecting not only words but entire modalities.

SEO Implication

For global SEO, this scaling ensures consistent topical coverage across languages, which strengthens topical authority in multilingual markets.

Evaluation in Modern MT

Classic metrics like BLEU, which count word n-gram overlap with a reference translation, remain common, but newer metrics track human judgments of quality more closely:

chrF: character n-gram F-score.

COMET: a neural metric trained on human quality ratings, which correlates with human judgment more closely than n-gram overlap metrics.

Human evaluation: still the gold standard, including in the WMT shared tasks.
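chrF compares character n-grams (typically n = 1 to 6) between a hypothesis and a reference and combines character precision and recall into an F-score. With β = 2, recall counts twice as much as precision. The sketch below follows Popović's averaged formulation under simplifying assumptions: a single reference, whitespace removed, and precision and recall averaged over n-gram orders. Library implementations such as sacrebleu differ in details, so its scores should not be compared with published numbers. The function names are hypothetical.

```python
from collections import Counter


def char_ngrams(text, n):
    """Character n-gram counts, ignoring whitespace."""
    s = "".join(text.split())
    return Counter(s[i:i + n] for i in range(len(s) - n + 1))


def chrf(hypothesis, reference, max_n=6, beta=2.0):
    """Simplified sentence-level chrF score on a 0-100 scale."""
    precisions, recalls = [], []
    for n in range(1, max_n + 1):
        hyp, ref = char_ngrams(hypothesis, n), char_ngrams(reference, n)
        if not hyp or not ref:
            continue  # text too short for this n-gram order
        overlap = sum((hyp & ref).values())  # clipped n-gram matches
        precisions.append(overlap / sum(hyp.values()))
        recalls.append(overlap / sum(ref.values()))
    if not precisions:
        return 0.0
    p = sum(precisions) / len(precisions)
    r = sum(recalls) / len(recalls)
    if p + r == 0:
        return 0.0
    b2 = beta ** 2
    return 100 * (1 + b2) * p * r / (b2 * p + r)


if __name__ == "__main__":
    reference = "the cat sat"
    for hypothesis in ["the cat sat", "the cats sat", "a dog ran"]:
        print(hypothesis, round(chrf(hypothesis, reference), 1))
```

Because matching happens at the character level, "cats" still earns partial credit against "cat". This makes chrF more forgiving of morphological variation than word-level BLEU.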

In short, evaluation is moving toward measuring whether a translation preserves meaning (semantic similarity) rather than shallow word overlap.

SEO Implication

High-quality translation ensures accurate mapping of concepts across languages, maintaining consistent contextual hierarchy in multilingual content hubs.

Machine Translation and Semantic SEO

Modern MT has direct implications for SEO strategies:

Entity Graph Expansion: Translating content while preserving entities enriches global entity connections.

Passage Ranking: Accurate translation supports multilingual passage ranking, letting fragments of translated text rank globally.

Update Score & Freshness: Frequent updates of translated content reinforce its update score, signaling trust to search engines.

Final Thoughts on Machine Translation

From Statistical MT to the Transformer revolution, MT has progressed from phrase tables to contextual embeddings that capture meaning across languages.

For NLP, it demonstrates the power of representation learning. For SEO, it enables global expansion — ensuring that topical coverage, entity connections, and semantic structures are faithfully preserved across linguistic boundaries.

Machine Translation is no longer just about converting words. It’s about building a multilingual semantic ecosystem that reinforces authority, trust, and global reach.

Frequently Asked Questions (FAQs)

Is SMT still relevant today?

Yes, in constrained domains or when interpretability is required. But for most tasks, Transformers dominate.

Which Transformer MT systems stand out?

Marian for open-source deployment, NLLB-200 for multilingual coverage, and SeamlessM4T for speech + text.

How does MT affect SEO?

It ensures multilingual consistency, strengthens entity graphs, and reinforces topical coverage across languages.

What metrics best evaluate MT quality?

BLEU is common, but COMET and human evaluation better capture semantic relevance.

Want to Go Deeper into SEO?

Explore more from my SEO knowledge base:

▪️ SEO & Content Marketing Hub — Learn how content builds authority and visibility

▪️ Search Engine Semantics Hub — A resource on entities, meaning, and search intent

▪️ Join My SEO Academy — Step-by-step guidance for beginners to advanced learners

Whether you’re learning, growing, or scaling, you’ll find everything you need to build real SEO skills.

Feeling stuck with your SEO strategy?

If you’re unclear on next steps, I’m offering a free one-on-one audit session to help you move forward.
